As I said in my last post, I recently moved. Which mean that all the work I did in my last apartment is gone. That was mostly physical infrastructure work, particularly the cabling. So now I get to do it again; joy!

But before I get into the new place, I should visit some topics from the past. An update of sorts. Just because I haven’t posted in a year doesn’t mean the homelab has sat untouched for a year.

UPS Delivered!

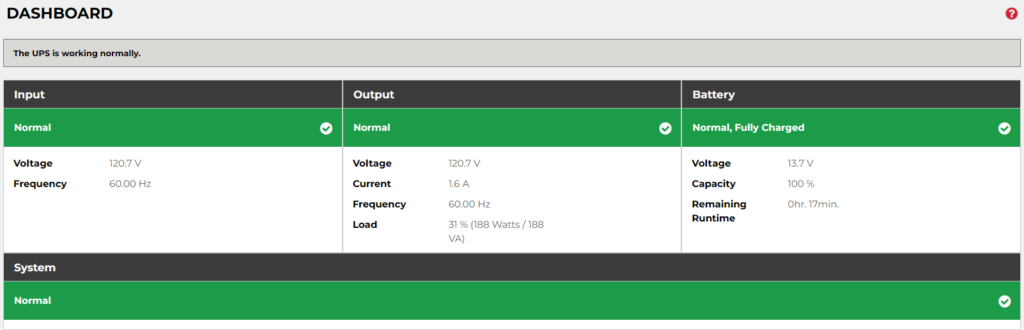

Back in April, I finally bit the bullet. I bought a “CyberPower PFC Sinewave Series CP1000PFCLCD – UPS – 600-watt – 1000 VA.” I wanted something that would communicate with ESXi to initiate a “semi-graceful” auto-shutdown if wall power was lost.

I say “semi-graceful” since with my setup, I only have ~17min of battery life. That covers three devices: my server, my Unifi Secure Gateway (USG), and a 5-port Unifi Flex-mini Switch.

Via the accompanying PowerPanel software, I can monitor battery status, while also configuring the shutdown behavior in for ESXi. I actually have PowerPanel installed as a separate VM in ESXi. Probably not the best idea, but it works.

Back to the semi-graceful shutdown, VMs sometimes take time to properly shutdown, especially Windows Server. So between ESXi and PowerPanel, I give some time for the VMs to gracefully shutdown. But if they don’t shutdown in time, then ungraceful shutdown of VMs occur, before ESXi gracefully powers down.

Took a bit of testing to get it all figured out, but it does work. At my last apartment, there were a couple of brief blackouts or brownouts. The UPS did exactly what it needed to do.

My (Home) Assistant Quit on Me

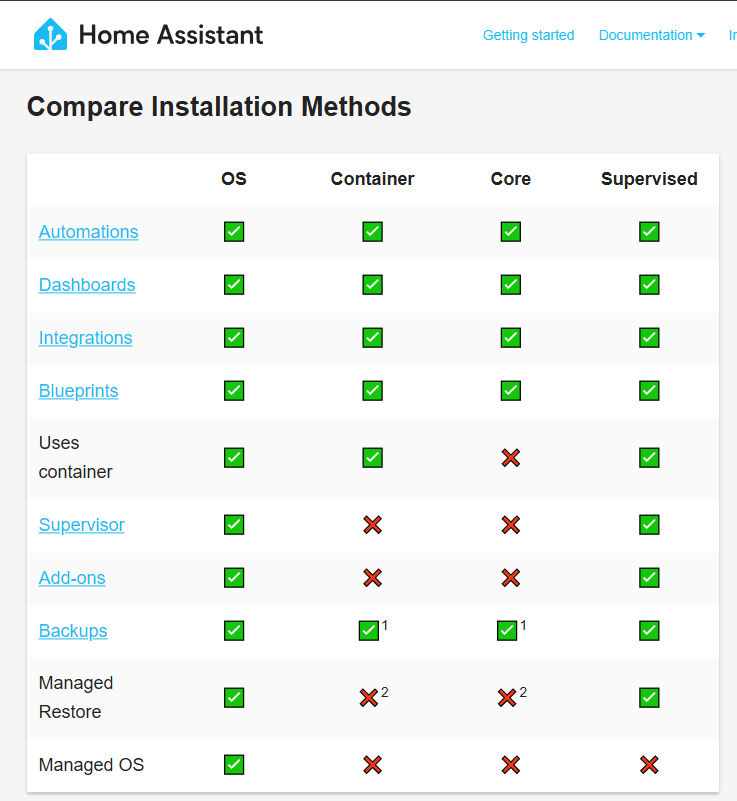

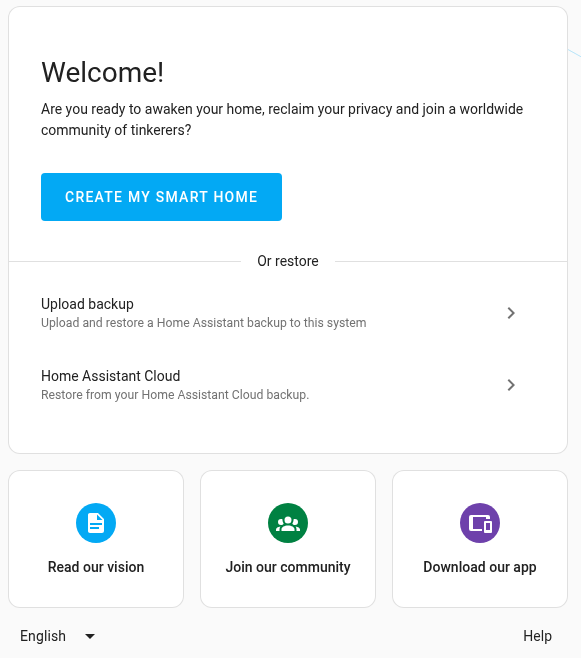

The very last post of 2023 was me futzing around with Home Assistant. I had a “concept of a plan” to automate some of my smart home devices.

After getting it installed, though, I didn’t really do much more with it. Stupid, I know.

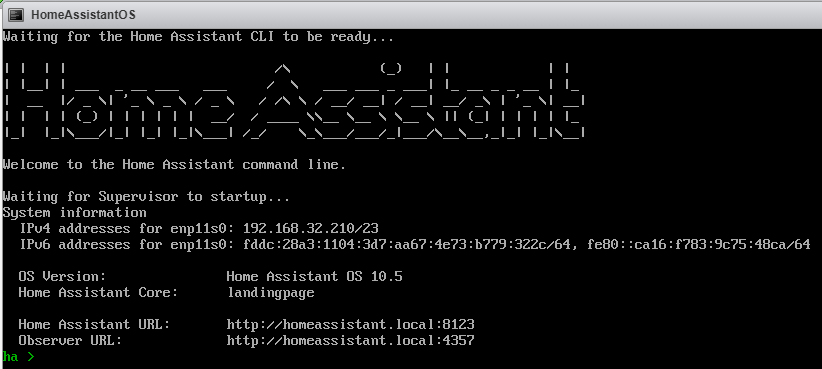

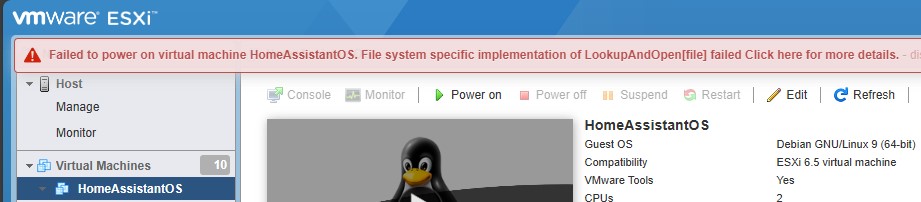

Unfortunately, at some point towards the end of 2023 or beginning of 2024, the HAOS VM bit the dust.

I don’t exactly know what happened, or when it happened. But I know there was a power outage one night. A storm, I think. Before I had my UPS…

I can’t say for certain the power outage was the reason. After all, it was at least a couple weeks later that I realized HAOS wasn’t turned on. When I tried turning it back on, I got that message, and have ever since.

I did look into this error message a little bit. But I think reinstalling HAOS is the better choice. Especially since I didn’t do any further setup anyway.

Glad I have the UPS now 🙂

Playing with Proxmox

Over the last year or two, the big news in the virtualization space is VMWare selling out to Broadcom. And Broadcom absolutely trying to squeeze out every last penny in licensing. Recently, AT&T disclosed that Broadcom was seeking a 1050% price increase on licensing.

I’m still using ESXi/vSphere 6.5 U3 on older than 10yrs old server. Which is fine, but at some point I’ll need to replace the hardware and software. Unfortunately, there are no more perpetual, free licenses for non-commercial purposes. I never even got a 7.0 license.

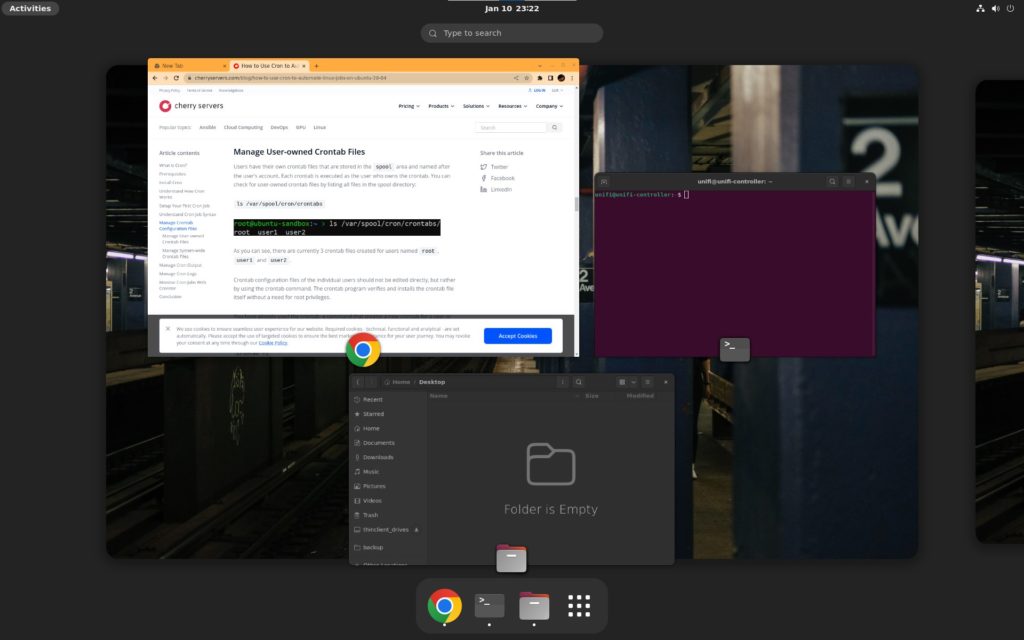

With that in mind, I thought it’d be interesting to play with Proxmox, which is a FOSS virtualization platform. As such, I installed it on another server I had lying around. Unlike ESXi, Proxmox is nowhere near as user friendly. And the documentation that’s available is pretty poor, in my opinion.

That’s one of the reasons FOSS sometimes annoys me: it’s often not very accessible to anyone who’s not already an expert. But that’s a topic for another post.

The first thing I wanted to do was connect my current NFS-based OS ISOs storage to Proxmox. This way, I wouldn’t have to use extra drive space on the new Proxmox server by copying over an ISO. If the data exists on the network, use it! This NFS share is hosted on my primary Windows Server VM.

I was able to point Proxmox to the NFS. However, Proxmox wanted to use its own directory structure within that NFS. I found that rather annoying. This wouldn’t be where the Proxmox VMs live, after all. It’s simply where the ISOs are. Why should I have to rearrange the directory structure and files just for Proxmox?

I honestly don’t remember if I created a VM after all that. I don’t remember if it worked or not. But given the situation with VMWare, that won’t be the last time I play with Proxmox.

Let’s Get Physical, Physical (Again)

The last thing to report is minor, but worth mentioning. I ended up adding two more Ethernet runs. The important one being from one room to another, underneath the carpet and along the baseboards. Ah, the joys of apartment living.

Anyway, that’s not that big of a deal. I had already done it once, after all.

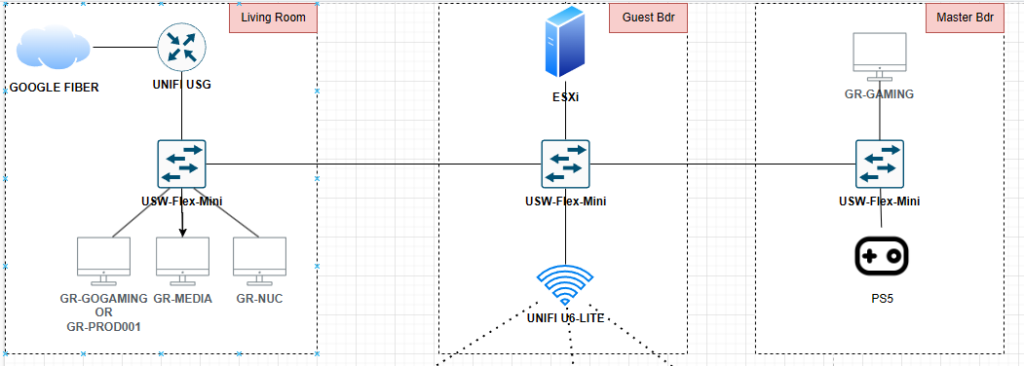

Rather, it was the idea that led to this. In my old apartment, the Google Fiber jack (ONT) was in the living room. The guest room down the hall served as the “server closet.” The server, Unifi AP, main switch, UPS, and other devices were in the guest room. But the Unifi Secure Gateway (USG) was in the living room, since the Internet point of entry was there. Which seemed strange to me. I wanted all the main gear in the guest room.

It’s hard to explain without a map or diagram, so I’ll use some. This is the diagram of my original layout:

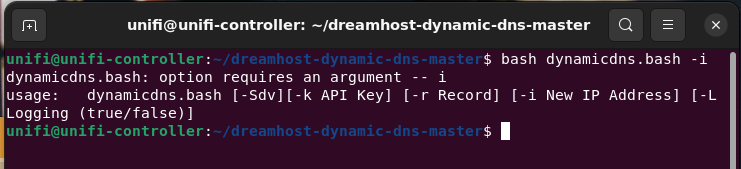

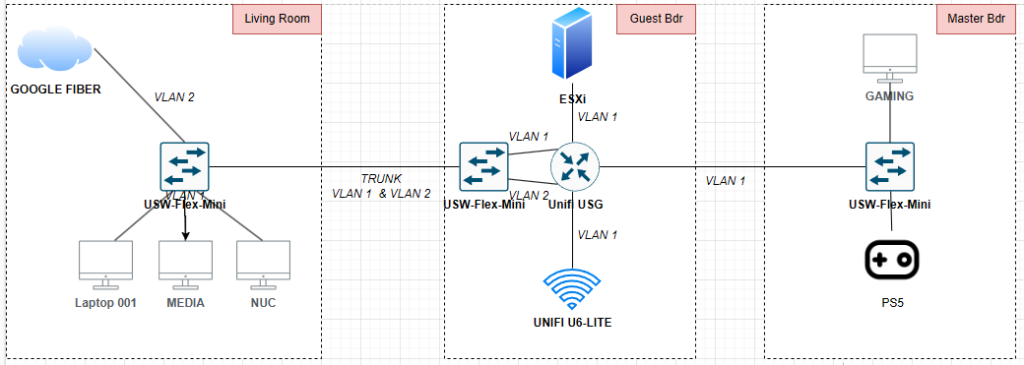

To move the USG to the guest bedroom, there were two ways to achieve this. One was by adding a second run from the living room to the guest bedroom. One run would connect the fiber jack to the WAN side of the USG. The other run would connect from the LAN side of the USG back to the switch in the living room.

But I wondered if it was possible to do this:

Essentially, could a WAN connection go through a VLAN? Because if it could, I wouldn’t have to run another cable. I looked it up and even asked on reddit. And the answer was yes, this is entirely possible and not that unusual!

Unfortunately, when I tried to do this, it didn’t work. It even caused me some more issues with the Unifi controller being inaccessible while it wasn’t working.

In the end, I just laid a second down a second Ethernet run. Maybe I gave up too quickly, but sometimes the easiest solution…is simply the easiest solution 🤷♂️

So that was last year in the life of the homelab. Not as much as I wanted to do, but it was at least something. And that’s the point, right? To at least play around with it and learn something.